DYNAMIXEL in Research

A curated collection of peer-reviewed research, theses, and academic projects using DYNAMIXEL smart actuators.

CHILD (Controller for Humanoid Imitation and Live Demonstration): a Whole-Body Humanoid Teleoperation System

SpeechCompass: Enhancing Mobile Captioning with Diarization and Directional Guidance via Multi-Microphone Localization

Flying Hand: End-Effector-Centric Framework for Versatile Aerial Manipulation Teleoperation and Policy Learning

Design of a low-cost and lightweight 6 DoF bimanual arm for dynamic and contact-rich manipulation

ORCA: An Open-Source, Reliable, Cost-Effective, Anthropomorphic Robotic Hand for Uninterrupted Dexterous Task Learning

Friction-Scaled Vibrotactile Feedback for Real-Time Slip Detection in Manipulation using Robotic Sixth Finger

Abstract

The integration of extra-robotic limbs/fingers to enhance and expand motor skills, particularly for grasping and manipulation, poses significant challenges. The grasping performance of existing limbs/fingers is far inferior to that of human hands. Human hands can detect the onset of slip through tactile feedback originating from tactile receptors during grasping, which enables precise and automatic regulation of grip force. This grip force is scaled by the coefficient of friction between the contacting surface and the fingers. Humans perceive this frictional information from the slip occurring between the finger and the object. This ability to perceive friction allows humans to apply just the force needed to maintain a secure grip, adjusting it to the weight of the object and the friction of the contact surface. Providing this capability to users of extra-robotic limbs or fingers is challenging. To address this challenge, this paper introduces a novel approach that communicates frictional information to users through encoded vibrotactile cues. These cues are delivered at the onset of incipient slip, allowing users to perceive the friction and use this information to increase their grip force and avoid dropping the object. In a two-alternative forced-choice (2AFC) protocol, participants gripped and lifted a glass under three different frictional conditions, applying a normal force of 3.5 N. After this force was reached, the grip on the glass was gradually released to induce slip. During this slipping phase, vibrations scaled according to the static coefficient of friction were presented to users, reflecting the frictional conditions. The results showed an accuracy of 94.53 ± 3.05% (mean ± SD) in perceiving frictional information when lifting objects with varying friction.
The results indicate that vibrotactile cues are effective as sensory feedback, allowing users of extra-robotic limbs or fingers to perceive frictional information. This enables them to assess surface properties and adjust grip force to the frictional conditions, improving their ability to grasp and manipulate objects. Powered By DYNAMIXEL.
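The abstract does not specify how the static coefficient of friction is converted into a vibration cue. The Python sketch below shows one plausible scheme, under stated assumptions. First, μ_s is estimated from the Coulomb slip condition: at incipient slip, the tangential force equals μ_s times the normal force. Second, μ_s is mapped linearly to a normalized vibration amplitude. The encoding range, the direction of the mapping, the example forces and mass, and the helper names (`estimate_static_friction`, `vibration_amplitude`, `min_grip_force`) are all hypothetical and are not taken from the paper.

```python
G = 9.81  # gravitational acceleration, m/s^2


def estimate_static_friction(tangential_n: float, normal_n: float) -> float:
    """Coulomb model: at incipient slip F_t = mu_s * F_n, so mu_s is about F_t / F_n."""
    if normal_n <= 0:
        raise ValueError("normal force must be positive")
    return tangential_n / normal_n


def vibration_amplitude(mu_s: float, mu_min: float = 0.2, mu_max: float = 1.0,
                        amp_min: float = 0.2, amp_max: float = 1.0) -> float:
    """Hypothetical linear encoding: a more slippery contact (lower mu_s) gives a stronger cue.

    Returns a normalized amplitude in [amp_min, amp_max] for a vibration motor driver.
    """
    mu = min(max(mu_s, mu_min), mu_max)        # clamp to the encoded range
    frac = (mu - mu_min) / (mu_max - mu_min)   # 0 at mu_min, 1 at mu_max
    return amp_max - frac * (amp_max - amp_min)


def min_grip_force(mass_kg: float, mu_s: float, contacts: int = 2, safety: float = 1.2) -> float:
    """Normal force per contact (N) so that contacts * mu_s * F_n exceeds the object's weight."""
    return safety * mass_kg * G / (contacts * mu_s)


if __name__ == "__main__":
    # Illustrative values only: 3.5 N normal force (as in the study), assumed 1.75 N at slip onset.
    mu = estimate_static_friction(tangential_n=1.75, normal_n=3.5)
    print(f"mu_s = {mu:.2f}, cue amplitude = {vibration_amplitude(mu):.2f}")
    print(f"grip force for an assumed 0.2 kg glass: {min_grip_force(0.2, mu):.2f} N")
```

The cue is inverted so that a more slippery surface produces a stronger vibration, which signals the need for more grip force. A real system might instead encode μ_s in frequency or pulse pattern.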

SOC and Temperature Aware Battery Swapping for an E-Scooter Using a Robotic Arm

Abstract

The main contribution of this paper is the integration of a battery management system (BMS), which ensures safe battery operation, with automated battery swapping for an electric scooter (e-scooter). The BMS continuously monitors the battery's state of charge (SOC) and temperature and initiates a battery swap under predefined conditions. This is crucial because conventional BMSs sometimes fail to detect early signs of potential issues, which can lead to safety hazards if not addressed promptly. Battery swapping stations are an effective alternative to traditional charging stations because they avoid lengthy charging times. This paper also addresses the problem of frequent battery recharging, which limits e-scooters' operational range. The proposed solution employs a robotic arm to execute battery swaps without human intervention. A computer vision system detects the e-scooter's battery and compensates for any tilt of the parked e-scooter to ensure accurate alignment, enabling the robotic arm to plan and execute the swap efficiently. The proposed system requires only minimal modifications to the existing e-scooter design, namely a specially designed battery compartment, and thus offers significant improvements over manual swapping methods. Powered By DYNAMIXEL.
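The abstract states that the BMS initiates a swap under predefined conditions on SOC and temperature, but it does not list those conditions. Below is a minimal Python sketch of such a trigger. The thresholds (20% SOC, 55 °C) and the class names `BatteryState` and `SwapPolicy` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BatteryState:
    soc: float            # state of charge as a fraction, 0.0 to 1.0
    temperature_c: float  # pack temperature in degrees Celsius


@dataclass
class SwapPolicy:
    # Hypothetical limits for illustration only; the paper's predefined conditions are not given.
    min_soc: float = 0.20
    max_temperature_c: float = 55.0

    def swap_reason(self, state: BatteryState) -> Optional[str]:
        """Return why a swap should start, or None if the battery can stay in service."""
        if state.temperature_c >= self.max_temperature_c:
            return "over-temperature"  # safety condition checked first
        if state.soc <= self.min_soc:
            return "low SOC"
        return None


if __name__ == "__main__":
    policy = SwapPolicy()
    for s in (BatteryState(0.80, 30.0), BatteryState(0.15, 30.0), BatteryState(0.60, 58.0)):
        print(s, "->", policy.swap_reason(s) or "no swap")
```

The sketch checks temperature before SOC on the assumption that thermal faults take priority. The paper's actual ordering and limits may differ.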

Exploring GPT-4 for Robotic Agent Strategy with Real-Time State Feedback and a Reactive Behaviour Framework

Vision-Ultrasound Robotic System based on Deep Learning for Gas and Arc Hazard Detection in Manufacturing

Abstract

Gas leaks and arc discharges present significant risks in industrial environments, requiring robust detection systems to ensure safety and operational efficiency. Inspired by human protocols that combine visual identification with acoustic verification, this study proposes a deep learning-based robotic system that autonomously detects and classifies gas leaks and arc discharges in manufacturing settings. The system executes all experimental tasks (A, B, C, D) entirely onboard the robot without external computation, demonstrating its capability for fully autonomous operation. Using a 112-channel acoustic camera sampling at 96 kHz to capture ultrasonic frequencies, the system processes real-world datasets recorded in diverse industrial scenarios. These datasets include multiple gas leak configurations (e.g., pinhole, open end) and partial discharge types (corona, surface, floating) under varying environmental noise conditions. The proposed system integrates YOLOv5 for visual detection with a beamforming-enhanced acoustic analysis pipeline. Signals are transformed using the Short-Time Fourier Transform (STFT) and refined through gamma correction, enabling robust feature extraction. An Inception-inspired convolutional neural network then classifies the hazards, achieving 99% gas leak detection accuracy. The system not only detects individual hazard sources but also improves classification reliability by fusing multi-modal data from the vision and acoustic sensors. When tested in reverberation- and noise-augmented environments, the system outperformed conventional models by up to 44 percentage points, with experimental tasks designed to ensure fairness and reproducibility. Additionally, the system is optimized for real-time deployment, with an inference time of 2.1 seconds on a mobile robotic platform.
By emulating human-like inspection protocols and integrating vision with acoustic modalities, this study presents an effective solution for industrial automation, significantly improving safety and operational reliability. Powered By DYNAMIXEL.
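STFT followed by gamma correction is a standard way to turn raw acoustic samples into a spectrogram image that a CNN can classify. The NumPy sketch below is a generic illustration, not the authors' pipeline. Several choices are assumptions: the window length, the hop size, the gamma value, and the synthetic 40 kHz test tone. Beamforming across the 112 microphone channels is omitted.

```python
import numpy as np


def stft_magnitude(x: np.ndarray, fs: float, n_fft: int = 1024, hop: int = 256):
    """Magnitude STFT with a Hann window. Returns (frequencies in Hz, array of shape frames x bins)."""
    if len(x) < n_fft:
        raise ValueError("signal shorter than one frame")
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    spectrum = np.abs(np.fft.rfft(frames, axis=1))
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
    return freqs, spectrum


def gamma_correct(spec: np.ndarray, gamma: float = 0.5) -> np.ndarray:
    """Normalize to [0, 1], then apply a power law; gamma < 1 lifts weak spectral components."""
    peak = spec.max()
    normalized = spec / peak if peak > 0 else spec
    return normalized ** gamma


if __name__ == "__main__":
    fs = 96_000                                   # sampling rate stated in the abstract
    t = np.arange(fs // 10) / fs                  # 0.1 s of samples
    rng = np.random.default_rng(0)
    x = np.sin(2 * np.pi * 40_000 * t) + 0.01 * rng.standard_normal(t.size)  # synthetic 40 kHz tone
    freqs, spec = stft_magnitude(x, fs)
    image = gamma_correct(spec)
    print("dominant frequency:", freqs[image.mean(axis=0).argmax()], "Hz")
```

A gamma below 1 compresses the dynamic range, so faint ultrasonic signatures remain visible next to strong peaks in the image passed to the classifier.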

Prismatic-Bending Transformable (PBT) Joint for a Modular, Foldable Manipulator with Enhanced Reachability and Dexterity

Tendon-driven Grasper Design for Aerial Robot Perching on Tree Branches

Abstract— Protecting and restoring forest ecosystems has become an important conservation issue. Although various robots have been used for field data collection to protect forest ecosystems, the complex terrain and dense canopy make data collection inefficient. To address this challenge, we propose an aerial platform whose bio-inspired behaviour is enabled by a bio-inspired mechanism. The platform minimizes its energy use during data collection by perching on tree branches. A raptor-inspired vision algorithm locates a tree trunk and then identifies a horizontal branch on which the platform can perch. A tendon-driven mechanism inspired by bat claws, which requires energy only for actuation, secures the platform to the branch using the mechanism's passive compliance. Experimental results show that the mechanism can perch on branches from 30 mm to 80 mm in diameter. Real-world tests validated the system's ability to select and adapt to target points, and the system is expected to be useful in complex forest ecosystems. Powered By DYNAMIXEL.

Closed-Loop Control and Disturbance Mitigation of an Underwater Multi-Segment Continuum Manipulator

BEHAVIOR ROBOT SUITE: Streamlining Real-World Whole-Body Manipulation for Everyday Household Activities

Synergy-based robotic quadruped leveraging passivity for natural intelligence and behavioural diversity

Autonomous Robotic Pepper Harvesting: Imitation Learning in Unstructured Agricultural Environments

Abstract— Automating tasks in outdoor agricultural fields poses significant challenges due to environmental variability, unstructured terrain, and diverse crop characteristics. We present a robotic system for autonomous pepper harvesting designed to operate in these unprotected, complex settings. Utilizing a custom handheld shear-gripper, we collected 300 demonstrations to train a visuomotor policy, enabling the system to adapt to varying field conditions and crop diversity. We achieved a success rate of 28.95% with a cycle time of 31.71 seconds, comparable to existing systems tested under more controlled conditions like greenhouses. Our system demonstrates the feasibility and effectiveness of leveraging imitation learning for automated harvesting in unstructured agricultural environments. This work aims to advance scalable, automated robotic solutions for agriculture in natural settings. Powered By DYNAMIXEL.

Loopy Movements: Emergence of Rotation in a Multicellular Robot

Abstract— Unlike most human-engineered systems, many biological systems rely on emergent behaviors arising from low-level interactions, which enable greater diversity and superior adaptation to complex, dynamic environments. This study explores emergent decentralized rotation in the Loopy multicellular robot, which is composed of homogeneous, physically linked, 1-degree-of-freedom cells. Inspired by biological systems like sunflowers, Loopy uses simple local interactions (diffusion, reaction, and active transport of simulated chemicals called morphogens) without centralized control or knowledge of its global morphology. Through these interactions, the robot self-organizes to achieve coordinated rotational motion and forms lobes, which are local protrusions created by clusters of motor cells. This study investigates how these interactions drive Loopy's rotation, the impact of its morphology, and its resilience to actuator failures. Our findings reveal two distinct behaviors: (1) inner valleys between lobes rotate faster than the outer peaks, in contrast to rigid-body dynamics, and (2) cells rotate in the opposite direction to the overall morphology. The experiments show that while Loopy's morphology does not affect its angular velocity relative to its cells, larger lobes increase cellular rotation and decrease morphology rotation relative to the environment. Even with up to one-third of its actuators disabled and significant morphological changes, Loopy maintains its rotational abilities, highlighting the potential of decentralized, bio-inspired strategies for resilient and adaptable robotic systems. Powered By DYNAMIXEL.
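The sketch below makes "simple local interactions" concrete. It updates one morphogen concentration on a ring of cells, and each cell exchanges only with its two neighbours. The update combines three terms: discrete diffusion, a one-directional active-transport flux, and linear production/decay. This is a generic reaction-diffusion-transport toy with assumed parameters and a hypothetical function name, `morphogen_step`. It is not Loopy's actual model or controller.

```python
import numpy as np


def morphogen_step(m: np.ndarray, diffusion: float = 0.1, transport: float = 0.05,
                   decay: float = 0.0, production: float = 0.0) -> np.ndarray:
    """One explicit update of a morphogen concentration on a ring of cells.

    Every term uses only a cell's own value and its two neighbours; np.roll wraps the
    array so the first and last cells are neighbours, closing the loop.
    For non-negative results keep 2 * diffusion + transport + decay <= 1 and production >= 0.
    """
    laplacian = np.roll(m, 1) + np.roll(m, -1) - 2.0 * m  # discrete diffusion
    moved = transport * m                                 # share each cell pushes onward
    advection = np.roll(moved, 1) - moved                 # received from cell i-1, sent to cell i+1
    reaction = production - decay * m                     # linear source/sink
    return m + diffusion * laplacian + advection + reaction


if __name__ == "__main__":
    m = np.zeros(12)
    m[0] = 1.0                        # all morphogen starts in one cell
    for _ in range(50):
        m = morphogen_step(m)
    print("total:", round(float(m.sum()), 6), "peak cell:", int(m.argmax()))
```

With no production or decay, diffusion and transport only move morphogen between neighbours, so the total amount around the ring is conserved. Meanwhile, the transport term steadily shifts the peak in one direction.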

Soft Vision-Based Tactile-Enabled Sixth Finger: Advancing Daily Objects Manipulation for Stroke Survivors

Robotic System with Tactile-Enabled High-Resolution Hyperspectral Imaging Device for Autonomous Corn Leaf Phenotyping in Controlled Environments

Variable-Friction In-Hand Manipulation for Arbitrary Objects via Diffusion-Based Imitation Learning

Abstract— Dexterous in-hand manipulation (IHM) of arbitrary objects is challenging due to the rich and subtle contact process. Variable-friction manipulation is an alternative approach to dexterity that has previously demonstrated robust and versatile 2D IHM capabilities with only two single-joint fingers. However, hard-coded manipulation methods for variable-friction hands are restricted to regular polygonal objects and limited target poses, and they require the policy to be tailored to each object. This paper proposes an end-to-end learning-based method for manipulating arbitrary objects to any target pose on real hardware, with minimal engineering effort and data collection. The method features diffusion policy-based imitation learning, co-trained on simulation data and a small amount of real-world data. With the proposed framework, arbitrary objects, including polygons and non-polygons, can be precisely manipulated to arbitrary goal poses with 2 hours of training on an A100 GPU and only 1 hour of real-world data collection. The precision is higher than that of previous customized object-specific policies, with an average success rate of 71.3% and average pose errors of 2.676 mm and 1.902°. Powered By DYNAMIXEL.

Global-Local Interface for On-Demand Teleoperation

Abstract: Teleoperation is a critical human-robot interface and holds significant potential for enabling robotic applications in industrial and unstructured environments. Existing teleoperation methods have distinct strengths and limitations in flexibility, workspace range, and precision. To fuse these advantages, we introduce the Global-Local (G-L) Teleoperation Interface. This interface decouples robotic teleoperation into global behavior, which ensures the robot's motion range and intuitiveness, and local behavior, which enhances the human operator's dexterity and capability for performing fine tasks. The G-L interface enables efficient teleoperation not only for conventional tasks like pick-and-place, but also for challenging fine manipulation and large-scale movements. Based on the G-L interface, we constructed single-arm and dual-arm teleoperation systems with different remote control devices. We then demonstrated tasks requiring a large motion range, precise manipulation, or dexterous end-effector control. Extensive experiments validated the user-friendliness, accuracy, and generalizability of the proposed interface. Powered By DYNAMIXEL.

ExoKit: A Toolkit for Rapid Prototyping of Interactions for Arm-based Exoskeletons

Abstract - Exoskeletons open up a unique interaction space that seamlessly integrates users' body movements with robotic actuation. Despite its potential, human-exoskeleton interaction remains an underexplored area in HCI, largely due to the lack of accessible prototyping tools that enable designers to easily develop exoskeleton designs and customized interactive behaviors. We present ExoKit, a do-it-yourself toolkit for rapid prototyping of low-fidelity, functional exoskeletons targeted at novice roboticists. ExoKit includes modular hardware components for sensing and actuating shoulder and elbow joints, which are easy to fabricate and (re)configure for customized functionality and wearability. To simplify the programming of interactive behaviors, we propose functional abstractions that encapsulate high-level human-exoskeleton interactions. These can be accessed through ExoKit's command-line or graphical user interface, a Processing library, or microcontroller firmware, each targeted at a different experience level. Findings from implemented application cases and two usage studies demonstrate the versatility and accessibility of ExoKit for early-stage interaction design. Powered By DYNAMIXEL.

Embodied design for enhanced flipper-based locomotion in complex terrains

Abstract: Robots are becoming increasingly essential for traversing complex environments such as disaster areas, extraterrestrial terrains, and marine environments. Yet their potential is often limited by mobility and adaptability constraints. In nature, various animals have evolved finely tuned designs and anatomical features that enable efficient locomotion in diverse environments. Sea turtles, for instance, possess specialized flippers that facilitate both long-distance underwater travel and adept maneuvers across a range of coastal terrains. Building on the principles of embodied intelligence and drawing inspiration from sea turtle hatchlings, this paper examines the critical interplay between a robot's physical form and its environmental interactions, focusing on how morphological traits and locomotive behaviors affect terrestrial navigation. We present a bioinspired robotic system and study how flipper/body morphology and gait patterns affect its terrestrial mobility across diverse terrains, ranging from sand to rocks. Our experimental results, evaluated on key performance metrics such as speed and cost of transport, highlight adaptive designs as crucial for multi-terrain robotic mobility. Such designs provide not only speed and efficiency but also the versatility needed to tackle the varied and complex terrains encountered in real-world applications. Powered By DYNAMIXEL.

ALPHA-α and Bi-ACT Are All You Need: Importance of Position and Force Information/Control for Imitation Learning of Unimanual and Bimanual Robotic Manipulation with Low-Cost System

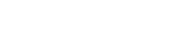

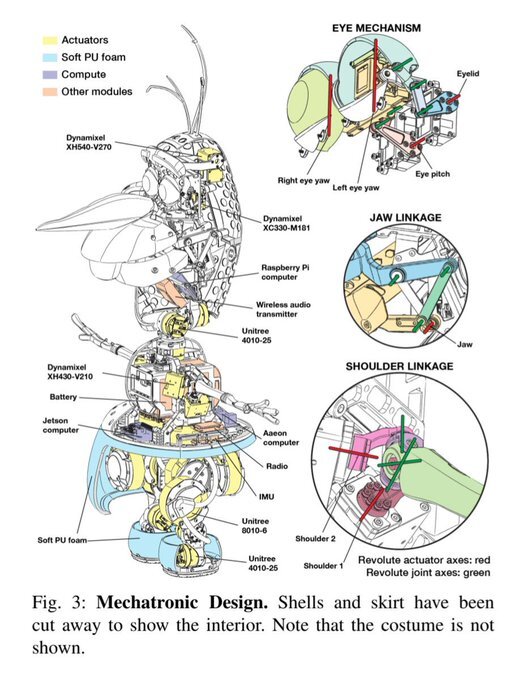

ToddlerBot: Open-Source ML-Compatible Humanoid Platform for Loco-Manipulation (Stanford University)

Abstract—Learning-based robotics research driven by data demands a new approach to robot hardware design: one that serves as both a platform for policy execution and a tool for embodied data collection to train policies. We introduce ToddlerBot, a low-cost, open-source humanoid robot platform designed for scalable policy learning and research in robotics and AI. ToddlerBot enables seamless acquisition of high-quality simulation and real-world data. The plug-and-play zero-point calibration and transferable motor system identification ensure a high-fidelity digital twin, enabling zero-shot policy transfer from simulation to the real world. A user-friendly teleoperation interface facilitates streamlined real-world data collection for learning motor skills from human demonstrations.

Its data collection ability and anthropomorphic design make ToddlerBot an ideal platform for whole-body loco-manipulation. Additionally, ToddlerBot's compact size (0.56 m, 3.4 kg) ensures safe operation in real-world environments. Reproducibility is achieved with an entirely 3D-printed, open-source design and commercially available components, keeping the total cost under 6000 USD. Comprehensive documentation allows assembly and maintenance with basic technical expertise, as validated by a successful independent replication of the system. We demonstrate ToddlerBot's capabilities through arm span, payload, and endurance tests, loco-manipulation tasks, and a collaborative long-horizon scenario where two robots tidy a toy session together. By advancing ML-compatibility, capability, and reproducibility, ToddlerBot provides a robust platform for scalable learning and dynamic policy execution in robotics research. Powered By DYNAMIXEL.

VMP: Versatile Motion Priors for Robustly Tracking Motion on Physical Characters - Research Paper

Abstract:

Recent progress in physics-based character control has made it possible to learn policies from unstructured motion data. However, it remains challenging to train a single control policy that works with diverse and unseen motions and can be deployed on real-world physical robots. In this paper, we propose a two-stage technique that enables control of a character with a full-body kinematic motion reference, with a focus on imitation accuracy. In the first stage, we extract a latent space encoding by training a variational autoencoder that takes short windows of motion from unstructured data as input. In the second stage, we use the embedding from the time-varying latent code to train a conditional policy, providing a mapping from kinematic input to dynamics-aware output. By keeping the two stages separate, we benefit from self-supervised methods to obtain better latent codes and from explicit imitation rewards to avoid mode collapse. We demonstrate the efficiency and robustness of our method in simulation, with unseen user-specified motions, and on a bipedal robot, where we bring dynamic motions to the real world. Powered By DYNAMIXEL.

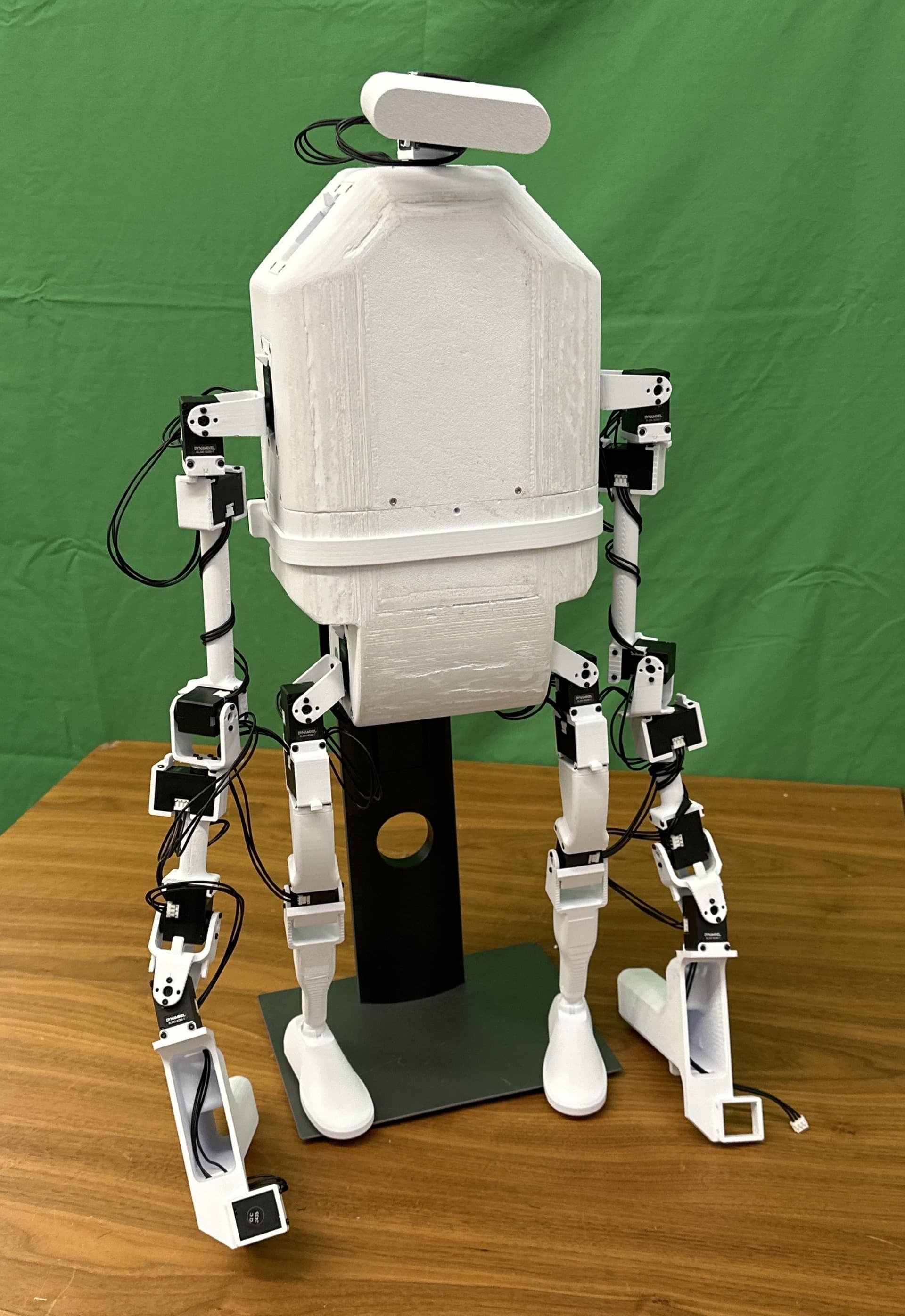

Design and Control of a Bipedal Robotic Character

Abstract

Legged robots have achieved impressive feats in dynamic locomotion in challenging unstructured terrain. However, in entertainment applications, the design and control of these robots face additional challenges in appealing to human audiences. This work aims to unify expressive, artist-directed motions and robust dynamic mobility for legged robots. To this end, we introduce a new bipedal robot, designed with a focus on character-driven mechanical features. We present a reinforcement learning-based control architecture to robustly execute artistic motions conditioned on command signals. During runtime, these command signals are generated by an animation engine which composes and blends between multiple animation sources. Finally, an intuitive operator interface enables real-time show performances with the robot. The complete system results in a believable robotic character, and paves the way for enhanced human-robot engagement in various contexts, in entertainment robotics and beyond. Powered By DYNAMIXEL.

Mobile ALOHA: Learning Bimanual Mobile Manipulation with Low-Cost Whole-Body Teleoperation (Stanford University)

LEAP Hand: Low-Cost, Efficient, and Anthropomorphic Hand for Robot Learning

Abstract

Dexterous manipulation has been a long-standing challenge in robotics. While machine learning techniques have shown some promise, results have so far been largely limited to simulation. This can mostly be attributed to the lack of suitable hardware. In this paper, we present LEAP Hand, a low-cost, dexterous, and anthropomorphic hand for machine learning research. In contrast to previous hands, LEAP Hand has a novel kinematic structure that allows maximal dexterity regardless of finger pose. LEAP Hand is low-cost and can be assembled in 4 hours at a cost of 2000 USD from readily available parts. It is capable of consistently exerting large torques over long durations. We show that LEAP Hand can be used to perform several manipulation tasks in the real world, from visual teleoperation to learning from passive video data and sim2real. LEAP Hand significantly outperforms its closest competitor, Allegro Hand, in all our experiments while costing one-eighth as much. Powered By DYNAMIXEL.

Development of an inexpensive 3D clinostat and comparison with other microgravity simulators using Mycobacterium marinum

Google DeepMind: Learning Agile Soccer Skills for a Bipedal Robot with Deep Reinforcement Learning

Development of the Bento Arm: An Improved Robotic Arm for Myoelectric Training and Research

IEEE Transactions on Robotics: Lie Group Formulation and Sensitivity Analysis for Shape Sensing of Variable Curvature Continuum Robots With General String Encoder Routing

Abstract—

This article considers a combination of actuation tendons and measurement strings to achieve accurate shape sensing and direct kinematics of continuum robots. Assuming general string routing, a methodical Lie group formulation for the shape sensing of these robots is presented. The shape kinematics is expressed using arc-length-dependent curvature distributions parameterized by modal functions, and the Magnus expansion for Lie group integration is used to express the shape as a product of exponentials. The tendon and string length kinematic constraints are solved for the modal coefficients, and the configuration space and body Jacobian are derived. The noise amplification index for the shape reconstruction problem is defined and used for optimizing the string/tendon routing paths, and a planar simulation study shows the minimal number of strings/tendons needed for accurate shape reconstruction. A torsionally stiff continuum segment is used for experimental evaluation, demonstrating mean (maximal) end-effector absolute position error of less than 2% (5%) of total length. Finally, a simulation study of a torsionally compliant segment demonstrates the approach for general deflections and string routings. We believe that the methods of this article can benefit the design process, sensing, and control of continuum and soft robots. Powered By DYNAMIXEL.
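The Python sketch below is a planar illustration of expressing a continuum robot's shape as a product of exponentials. It integrates a modal curvature profile on SE(2), applying a first-order (midpoint) Magnus step to each short segment. Several elements are assumptions: the polynomial modal basis, the inextensible-rod twist, the segment count, and the function names. The article itself treats the full spatial case with general string routing.

```python
import math


def se2_exp(vx: float, vy: float, w: float):
    """Exponential map of a planar twist (vx, vy, w) to a pose (x, y, theta)."""
    if abs(w) < 1e-12:
        return vx, vy, 0.0
    s, c = math.sin(w), math.cos(w)
    return (s * vx - (1 - c) * vy) / w, ((1 - c) * vx + s * vy) / w, w


def compose(a, b):
    """Group product of two SE(2) poses: apply b in the frame of a."""
    xa, ya, ta = a
    xb, yb, tb = b
    return (xa + math.cos(ta) * xb - math.sin(ta) * yb,
            ya + math.sin(ta) * xb + math.cos(ta) * yb,
            ta + tb)


def curvature(s: float, coeffs, length: float) -> float:
    """Hypothetical modal curvature: kappa(s) = sum_i c_i * (s / L)^i."""
    u = s / length
    return sum(c * u ** i for i, c in enumerate(coeffs))


def backbone_tip(coeffs, length: float, n_segments: int = 200):
    """Product of exponentials with a first-order (midpoint) Magnus step per segment."""
    h = length / n_segments
    pose = (0.0, 0.0, 0.0)
    for k in range(n_segments):
        s_mid = (k + 0.5) * h
        # Inextensible, unsheared planar rod: unit tangent speed, bending rate kappa(s).
        pose = compose(pose, se2_exp(h, 0.0, h * curvature(s_mid, coeffs, length)))
    return pose


if __name__ == "__main__":
    print(backbone_tip([2.0], 1.0))       # constant curvature: a circular arc
    print(backbone_tip([0.0, 2.0], 1.0))  # curvature growing linearly along the backbone
```

For constant curvature, every segment twist is identical, so the product reproduces the exact circular arc. For varying curvature, the midpoint step is an approximation whose error shrinks as `n_segments` grows.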

The Dragonfly Spectral Line Mapper: Design and First Light

ABSTRACT

The Dragonfly Spectral Line Mapper (DSLM) is the latest evolution of the Dragonfly Telephoto Array, which turns it into the world's most powerful wide-field spectral line imager. The DSLM will be the equivalent of a 1.6 m aperture f/0.26 refractor with a built-in Integral Field Spectrometer, covering a five-square-degree field of view. The new telescope is designed to carry out ultra-narrow bandpass imaging of the low surface brightness universe with exquisite control over systematic errors, including real-time calibration of atmospheric variations in airglow. The key to Dragonfly's transformation is the "Filter-Tilter", a mechanical assembly that holds ultra-narrow bandpass interference filters in front of each lens in the array and tilts them to smoothly shift their central wavelength. Here we describe our development process based on rapid prototyping, iterative design, and mass production. This process has resulted in numerous improvements to the design of the DSLM from the initial pathfinder instrument, including changes to narrower bandpass filters and the addition of a suite of calibration filters for continuum light subtraction and sky line monitoring. Improvements have also been made to the electronics and hardware of the array, which improve tilting accuracy, rigidity, and light baffling. We also present laboratory and on-sky measurements from the deployment of the first bank of lenses in May 2022, and a progress report on the completion of the full array in early 2023. Powered By DYNAMIXEL.
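Tilting an interference filter shifts its passband to shorter wavelengths according to the standard relation λ(θ) = λ0 √(1 − (sin θ / n_eff)²), where n_eff is the filter's effective refractive index. The Python sketch below evaluates this relation and its inverse. The value n_eff = 2.0, the 660 nm example filter, and the function names are assumed placeholders, since real filters specify their own n_eff. This is not the DSLM's control software.

```python
import math


def tilted_center_wavelength(lambda0_nm: float, tilt_deg: float, n_eff: float = 2.0) -> float:
    """Central wavelength after tilting: lambda0 * sqrt(1 - (sin(theta) / n_eff)^2)."""
    s = math.sin(math.radians(tilt_deg)) / n_eff
    return lambda0_nm * math.sqrt(1.0 - s * s)


def tilt_for_wavelength(lambda0_nm: float, target_nm: float, n_eff: float = 2.0) -> float:
    """Inverse relation: tilt angle (degrees) that moves the passband from lambda0 down to target."""
    if not 0 < target_nm <= lambda0_nm:
        raise ValueError("tilting can only shift the passband to shorter wavelengths")
    s = n_eff * math.sqrt(1.0 - (target_nm / lambda0_nm) ** 2)
    if s > 1.0:
        raise ValueError("target shift not reachable for this n_eff")
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    # Hypothetical filter centred at 660 nm at normal incidence, tuned toward H-alpha (~656.3 nm).
    angle = tilt_for_wavelength(660.0, 656.3)
    print(f"tilt {angle:.2f} deg -> {tilted_center_wavelength(660.0, angle):.2f} nm")
```

Because the shift depends on sin² θ, small tilts barely move the passband and the tuning rate increases with angle. This is one reason precise, repeatable tilt control matters.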

A comparison study on the dynamic control of OpenMANIPULATOR-X by PD with gravity compensation tuned by oscillation damping based on the phase-trajectory-length concept

An Affordable Robotic Arm Design for Manipulation in Tabletop Clutter

In this study, tabletop object manipulation in a cluttered environment using a robotic manipulator is considered. Our focus is on avoiding or manipulating other obstructions/objects to achieve the given task. We propose an affordable robotic manipulator design with five degrees of freedom, with the gripper providing one additional degree of freedom. The manipulator is approximately the length of an adult human's arm. An accurate simulation model for ROS/Gazebo, along with a preliminary motion controller, is also presented.

This academic paper features our DYNAMIXEL AX-12A, MX-28T, MX-64T, and MX-106T all-in-one smart actuators. Powered By DYNAMIXEL.

Design and Implementation of a Pair of Robot Arms with Bilateral Teleoperation

Abstract

Modern robotics has progressed in the manufacturing industry to the point that many manual labor tasks in assembly lines can be automated by robots with high speed and high positional accuracy. However, these robots typically cannot perform tasks that require perception or disturbance rejection. Humans are still needed in factories because of their innate ability to understand situations and react accordingly. Teleoperated robots allow human perception to be combined with the dexterity and safety of a robot, as long as the user interface and controls are carefully designed to avoid hindering the operator. Force-feedback bilateral teleoperation is one method for providing users with an intuitive user interface and feedback. This thesis documents the design, construction, and implementation of a pair of bilateral teleoperated robotic forearms, each consisting of a 2-degree-of-freedom wrist and a gripper. The forearms use commercial off-the-shelf actuators to keep cost and additional development time low, while also testing the feasibility of using non-custom actuators. Development of the forearms included design and manufacturing of the mechanical assemblies, implementation of a high-speed communication protocol, and tuning of control parameters. Powered By DYNAMIXEL.
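Force-feedback bilateral teleoperation is often built as a position-position architecture, in which a virtual spring-damper couples the master and slave joints. The Python sketch below computes those coupling torques for a single joint. The gains and the names `JointState` and `bilateral_torques` are hypothetical. The thesis's actual control law and tuned parameters are not reproduced here.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class JointState:
    q: float   # joint angle, rad
    dq: float  # joint velocity, rad/s


def bilateral_torques(master: JointState, slave: JointState,
                      kp: float = 2.0, kd: float = 0.05) -> Tuple[float, float]:
    """Symmetric position-position bilateral coupling for one joint.

    A virtual spring-damper connects the two joints: the slave is pulled toward the
    master, and the equal-and-opposite reaction is fed back to the operator as force.
    Returns (master torque, slave torque) in N*m for hypothetical gains kp, kd.
    """
    error = master.q - slave.q
    error_rate = master.dq - slave.dq
    tau_slave = kp * error + kd * error_rate
    tau_master = -tau_slave  # operator feels resistance when the slave lags or is blocked
    return tau_master, tau_slave


if __name__ == "__main__":
    # Slave wrist blocked by an obstacle while the operator moves the master ahead.
    print(bilateral_torques(JointState(q=0.5, dq=0.2), JointState(q=0.3, dq=0.0)))
```

The symmetric form makes the operator feel the slave's contact as resistance without a force sensor. The cost is that a stiff environment and high gains can make the loop oscillate, which is one reason control parameters need tuning.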